The scene has changed a lot since we first started doing conversion optimization back in 2006.

At that time, many marketers and C-suite executives were not convinced that they could do much to improve their website conversion rate. Things are very different today, with many companies attempting to deploy A/B tests on their websites.

However, the majority of companies continue to primarily invest in visitor-driving activities and set lower budgets for converting visitors into customers.

Invesp’s 2017 State of Conversion Rate Optimization survey showed that the majority of companies allocate less than 8% of their digital marketing budgets to conversion rate optimization.

If your company is starting with A/B or multivariate testing, the following guide will help you avoid 16 common mistakes we have seen companies fall into during their first years of testing.

1. Testing the wrong page

If you have a website with a large number of pages, a large number of visitors and a large number of conversions, then the possibilities are endless.

For an e-commerce website, you can start your conversion program by focusing on top-of-funnel pages, such as the homepage or category pages. You can also start with bottom-of-funnel pages, such as the cart or checkout pages.

If you run a lead generation website, you can start by optimizing your homepage, landing pages, or contact pages.

When you choose the wrong page, you invest time and money in a page that might not have a real impact on your bottom line. Surprisingly, many companies continue to pay little attention to the importance of selecting the right page to perform a split test on.

We covered creating a prioritized research opportunities list in a previous chapter.

Our research opportunities list for a CRO project contains, on average, anywhere from 150 to 250 items. This list is very powerful in guiding your CRO work. You will no longer pick random pages and random items to test. Every test you create is backed up by research.

2. Testing without creating visitor personas

Testing gives your visitors a voice in your website design process. It validates what works on your site and what does not. But, before you start testing, you must understand your visitors at an intimate level to create split tests that appeal to them.

We discussed the process of creating personas in several of our webinars and wrote about it in our book Conversion Optimization: The Art and Science of Converting Prospects to Customers.

Most companies have a decent knowledge of their target market. The challenge is how to translate that knowledge into actionable marketing insights on your website.

Personas play a crucial role in this process.

Let’s say you run an e-commerce website that sells gift baskets online. You have worked with your marketing team to define two different segments of your target market:

- B2C segment: white females, age 38 to 48, college educated, with annual income above $75,000. Your average order value for this segment is $125.

- B2B segment: corporate clients, with the purchase decision made by an executive. These companies generate between $10 million and $50 million in annual revenue. Your average order value for this segment is $930.

These two segments are a typical example of market segmentation, and they raise the central question of marketing design.

How can you design your website to appeal to these two distinctly different segments?

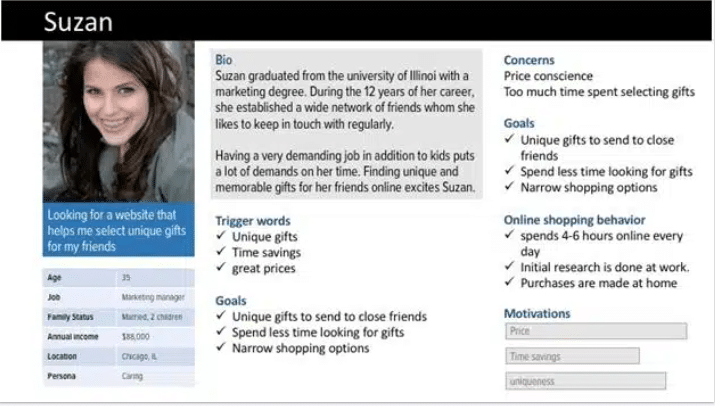

Creating personas will help you identify with each segment at a more detailed level. At the end of the persona creation process for this website, you could end up with eight different personas. Let’s take one of them as an example:

As you can see, Suzan represents your target market, but she removes the abstract nature of the marketing data. As you design different sections of the website, you will think about Suzan and how she would react to them.

You might be thinking to yourself, “this is all great, but how does that impact my testing?”

Proper and successful testing uses personas in creating design variations that challenge your existing baseline (control).

How would you create a homepage test when you are thinking of Suzan?

- She is a caring persona, so you can test different headlines that appeal to her

- She is looking for unique gifts, so you can test different designs that emphasize the uniqueness of the products you offer

- Price is an essential motivation for Suzan, so you can test different designs that emphasize pricing

3. Testing without a hypothesis

A testing hypothesis is a predictive statement about possible problems on a webpage, and the impact that fixing them may have on your KPI.

In our experience, there are two different types of issues companies run into when it comes to creating hypotheses for different tests:

- Many testers dismiss hypotheses as a luxury. They create a test that generates results (positive or negative), but when you ask them about the rationale behind the test, they cannot explain it.

- Others understand the importance of a hypothesis, but they write meaningless statements that have no real impact on testing.

Getting disciplined about creating test hypotheses will magnify the impact of your test results.

How do you come up with a test hypothesis in the first place?

Each conversion problem on your website should include three elements:

- A problem statement

- How the problem was identified

- An initial hypothesis on how to fix the problem

As discussed previously, you will then have to refine your initial hypothesis into a concrete hypothesis.

Of course, you can always ignore the process, throw things at the wall, and pray that one of your new designs will beat the control. And yes, it might work some of the time. But it will not work most of the time, and it will certainly never work if you are looking for repeatable and sustainable results.
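To make the three elements concrete, here is a minimal Python sketch of how a team might record each conversion problem before turning it into a test. The `ConversionProblem` class and its field names are hypothetical, not part of any tool, and the example entry is illustrative only (it borrows the price-shopping scenario described in mistake 8).

```python
from dataclasses import dataclass


@dataclass
class ConversionProblem:
    """One entry in a research opportunities list (hypothetical structure)."""
    problem_statement: str   # what is wrong on the page
    identified_by: str       # how the problem was identified
    initial_hypothesis: str  # first idea on how to fix it

    def is_complete(self) -> bool:
        # Ready to become a test only when all three elements are filled in.
        return all(
            value.strip()
            for value in (self.problem_statement, self.identified_by, self.initial_hypothesis)
        )


problem = ConversionProblem(
    problem_statement="Visitors leave product pages after checking prices",
    identified_by="Analytics: high bounce rate for PPC visitors on product pages",
    initial_hypothesis="Adding price-based incentives will reduce bounces",
)
print(problem.is_complete())  # True
```

The completeness check is deliberately simple: it only enforces that none of the three elements is left blank, which is the discipline the section argues for.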

4. Not considering mobile traffic

Many websites are getting a higher percentage of their traffic from mobile devices. Most of our European clients report that 50% to 70% of their traffic arrives on a mobile device.

You can only expect these numbers to grow over the next few years.

So, what should you do?

- Determine the percentage of your website traffic that uses mobile devices for browsing

- Determine the top ten devices visitors are using to browse your website

- Evaluate the behavior of mobile traffic compared to desktop traffic for all of the website funnels

These three steps should give you a lot of action points to take on your website.

Let’s see an example:

| # | Source | Medium | Device | Category to product | Product to cart | Cart to checkout |
|---|---|---|---|---|---|---|
| 1 | | Organic | Desktop | 40% | 18% | 40% |
| 1 | | Organic | Mobile | 43% | 18% | 22% |
| 2 | | Paid | Desktop | 31% | 15% | 33% |
| 2 | | Paid | Mobile | 35% | 13% | 18% |
| 3 | Bing | Paid | Desktop | 48% | 22% | 44% |
| 3 | Bing | Paid | Mobile | 52% | 22% | 25% |
| 4 | | Paid | Desktop | 32% | 14% | 37% |
| 4 | | Paid | Mobile | 36% | 13% | 18% |
| 5 | | Internal | Desktop | 50% | 35% | 30% |
| 5 | | Internal | Mobile | 55% | 33% | 18% |

For a CRO expert, the data above provides a wealth of information. When examining the flow from category to product pages, you will notice that Bing paid traffic outperforms all other types of paid traffic. It comes second only to the email campaigns. On the other hand, Facebook paid traffic underperforms compared to other channels.

If you consider the category to product flow, mobile traffic outperforms desktop for all traffic sources and mediums. This indicates that category page design for mobile is acceptable to users. However, notice the drop in mobile performance when visitors get to product pages.

Things get even worse when we start evaluating mobile checkout. The numbers are telling us that the mobile checkout has much higher abandonment rates compared to desktop.

The real investigation begins here. Why is it that mobile checkout underperforms?

Is it a problem with the website design or usability? Or is it the case that visitors use their mobile devices to browse the website and then when they are ready to place an order, they use their desktops?
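If you want to check the table's story yourself, a short Python sketch can divide each mobile rate by the matching desktop rate. The rates are copied from the table above; the source labels are hypothetical stand-ins, since only Bing is named in the table. A ratio above 1 means mobile outperforms desktop at that step; below 1 means it lags.

```python
# Funnel step conversion rates copied from the table above.
# Each tuple is (category -> product, product -> cart, cart -> checkout).
STEPS = ("category->product", "product->cart", "cart->checkout")

FUNNEL = {
    "1 organic":   {"desktop": (0.40, 0.18, 0.40), "mobile": (0.43, 0.18, 0.22)},
    "2 paid":      {"desktop": (0.31, 0.15, 0.33), "mobile": (0.35, 0.13, 0.18)},
    "3 Bing paid": {"desktop": (0.48, 0.22, 0.44), "mobile": (0.52, 0.22, 0.25)},
    "4 paid":      {"desktop": (0.32, 0.14, 0.37), "mobile": (0.36, 0.13, 0.18)},
    "5 internal":  {"desktop": (0.50, 0.35, 0.30), "mobile": (0.55, 0.33, 0.18)},
}


def mobile_to_desktop_ratios(funnel):
    """Mobile rate divided by desktop rate for every source and funnel step."""
    return {
        source: {
            step: rates["mobile"][i] / rates["desktop"][i]
            for i, step in enumerate(STEPS)
        }
        for source, rates in funnel.items()
    }


ratios = mobile_to_desktop_ratios(FUNNEL)
for source, by_step in ratios.items():
    # The step with the lowest ratio is where mobile lags desktop the most.
    weakest = min(by_step, key=by_step.get)
    print(f"{source}: weakest mobile step is {weakest} ({by_step[weakest]:.2f})")
```

With these numbers, cart to checkout comes out as the weakest mobile step for every source, which is consistent with the checkout observation above. The ratios only locate the problem; they cannot tell you whether it is a usability issue or cross-device behavior.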

5. Mobile vs desktop experience: the aftermath of testing

What brings visitors to your website on a mobile device is vastly different from what brings them to your website on a desktop. And that is the reason you should run separate mobile and desktop tests on different pages of your website. That is a no-brainer.

But how do you handle instances where the winning design for a mobile test on a particular page is very different from the winning design for a desktop experiment on that same page?

If you are lucky, your technical team will be able to serve two completely different experiences to visitors based on the type of device they are using. However, maintaining two different designs (mobile and desktop) for the same page can cause major development problems, and as a result, we have seen companies fall back on a single layout for both desktop and mobile.

That does not solve the issue; it ignores it. So, do not do that. Discuss your options with your technical team and figure out a way to handle this issue.

6. Not running separate tests for new vs. returning visitors

Returning visitors are loyal to your site. They are used to it with all of its conversion problems!

Humans are creatures of habit. In many instances, we find that returning visitors convert at a lower rate when we introduce new and better designs. This has been explained in the theory of momentum behavior.

For this reason, we always recommend testing new website designs with new visitors.

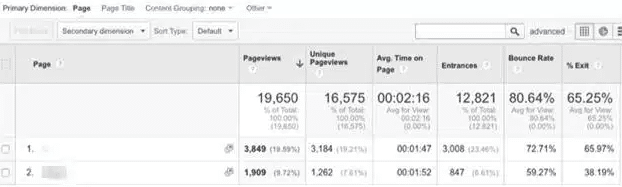

Before you test new designs, you need to assess how returning visitors interact with your website compared to new visitors. If you run Google Analytics, you can view visitor behavior by adding a visitor segment to most reports.

Let’s examine how visitors view different pages on your website.

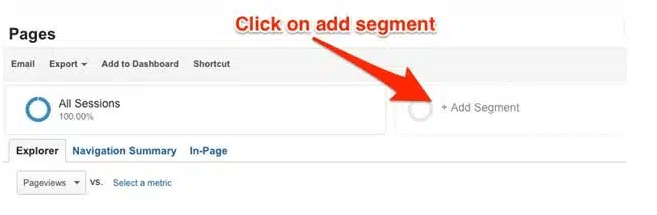

After you log in to Google Analytics, navigate to Behavior > Site Content > All Pages

Google will display the page report showing different metrics for your website.

To view how returning vs. new visitors interact with your website, apply segmentation to the report:

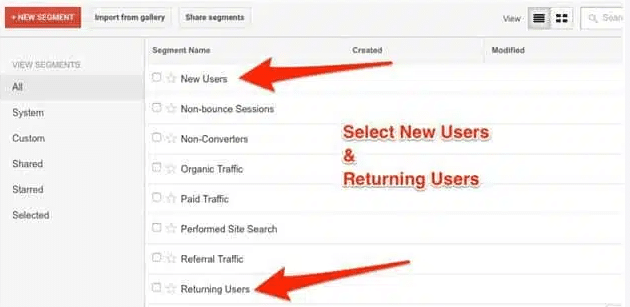

Select “New Users” and “Returning Users”

Google will now display the same report segmented by the type of user:

Notice the difference for this particular website in terms of bounce and exit rates for returning visitors compared to new visitors.

Most websites show anywhere from 15% to 30% difference in metrics between the two visitor segments.

If the difference in metrics between new and returning visitors is less than 10%, then you should scrutinize your design to understand why returning visitors react the same way as new visitors.
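The 10% rule of thumb can be expressed as a small check. This Python sketch assumes the difference is measured relative to the new-visitor value (the text does not say whether it means a relative or an absolute difference), and the metric values in the usage example are hypothetical.

```python
def relative_difference(new_value: float, returning_value: float) -> float:
    """Gap between segments, relative to the new-visitor value (an assumption)."""
    return abs(returning_value - new_value) / new_value


def segments_look_alike(metrics_new, metrics_returning, threshold=0.10):
    """Return the metrics where returning visitors behave almost like new visitors."""
    return [
        name
        for name in metrics_new
        if relative_difference(metrics_new[name], metrics_returning[name]) < threshold
    ]


# Hypothetical numbers for illustration only
new = {"bounce_rate": 0.52, "exit_rate": 0.40}
returning = {"bounce_rate": 0.38, "exit_rate": 0.38}
print(segments_look_alike(new, returning))  # ['exit_rate']
```

Here the bounce rate differs by about 27%, inside the typical range, while the exit rate differs by only 5%, so exits are the metric worth scrutinizing.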

Next, create a test specifically for new visitors. Most split testing platforms will allow you to segment visitors.

If your testing software does not support this feature, then switch to something else!

7. Ignoring different traffic sources

Visitors land on your website from diverse traffic sources and mediums. You will notice that visitors from different sources interact with your site in different ways.

Trust is one of the first and most significant influences on whether a visitor is persuaded to convert on your website. One of the subelements of trust is continuity.

Continuity means you must maintain consistent messaging and design from the traffic source/medium to the landing page.

Creating the same test for different traffic sources ignores that visitors might see different messaging or designs before landing on your website.

To assess how traffic sources can impact your website, follow these three steps:

- Understand how different traffic sources/mediums interact with your site

- Analyze reasons for different visitor interaction (if any)

- Create separate tests based on the traffic sources/mediums

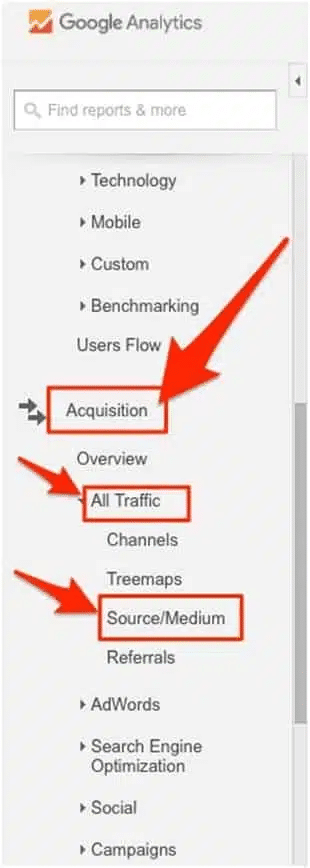

Let’s see how this is done in Google Analytics.

First, generate the source/medium report. To do this, navigate to Acquisition > All Traffic > Source/Medium.

Google will generate the report for you. Assessing every traffic source is impractical, so we recommend evaluating either your top ten sources or the sources that drive more than 50,000 visitors to your website.
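The two selection rules can be sketched in Python as follows. The `report` dictionary stands in for a hypothetical export of the Source/Medium report, the visitor counts are invented for illustration, and the function names are not part of Google Analytics.

```python
def top_sources(visitors_by_source, n=10):
    """The n sources/mediums with the most visitors, largest first."""
    ranked = sorted(visitors_by_source.items(), key=lambda item: item[1], reverse=True)
    return [source for source, _ in ranked[:n]]


def large_sources(visitors_by_source, min_visitors=50_000):
    """Every source/medium above the visitor threshold, largest first."""
    ranked = sorted(visitors_by_source.items(), key=lambda item: item[1], reverse=True)
    return [source for source, visitors in ranked if visitors > min_visitors]


# Hypothetical export of the Source/Medium report
report = {
    "google / organic": 120_000,
    "google / cpc": 80_000,
    "(direct) / (none)": 60_000,
    "newsletter / email": 55_000,
    "bing / cpc": 30_000,
    "facebook / cpc": 20_000,
}
print(top_sources(report, n=3))
print(large_sources(report))
```

Which rule to use depends on how concentrated your traffic is: the threshold rule can return more or fewer than ten sources.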

As you analyze the report, examine the following metrics:

- Bounce rate

- Exit rate

- Pages/Session

- The conversion rate for different goals

Ask questions such as:

- Which traffic sources are driving the highest conversions?

- Do you see high bounce rates for paid traffic?

- Which traffic sources are driving the lowest bounce and exit rates?

These steps help in determining if there is a difference in visitor behavior for different traffic sources.

Next, you need to determine the causes of such different behavior.

This will require examining each traffic source:

- What are visitors seeing before landing on your website?

- Do you have control over the display/messaging that visitors see before landing on the website?

- Can you change your landing page to maintain continuity in the experience?

8. Trying to do too much in one test

This is one of the mistakes we fell into during our first year of A/B and MVT testing. Our clients wanted to see large-scale experiments; small tests did not convince them. Instead of explaining what we were trying to accomplish, we created extensive tests that changed too many elements at the same time.

Most of our testing produced excellent results.

In fact, in 2007, 82% of our tests generated an uplift in conversions, and 78% produced an increase of more than 12% in conversion rates.

These are amazing results. So, what was the problem?

Since we made so many changes to a page in every test, we could not isolate what exactly generated the uplift, so our team could only make assumptions. Each uplift could have come from any of seven to nine different changes.

This approach might be okay if you are looking to do two to three tests and get done with testing (we do not recommend it).

But if you are looking for a long-term testing program that takes a company from 2% to 9% conversion rate, that approach will undoubtedly fail.

Twelve years later, our approach looks very different. Our testing programs are laser-focused.

Every test we perform relies on a hypothesis. The hypothesis can introduce several changes to the page at the same time, but research backs every change. For example, a single hypothesis about enhancing social proof could be implemented with a new headline, a new image, and a new layout. Yes, there are several elements, but they all support the overall hypothesis.

Let’s take a recent evaluation we did for a top IRCE 500 retailer. Their product pages suffered from high bounce rates. Visitors were clicking on PPC ads, getting to the product pages, checking out prices for different products, and then leaving. Visitors were price shopping.

Instead of doing a single test for the product pages, we did five rounds of testing:

- A split test focused on the value proposition of the website

- A split test focused on price-based incentives

- A split test focused on urgency-based incentives

- A split test focused on scarcity-based incentives

- A split test focused on social proof

What were the results of running these tests?

The website increased revenue (not conversions!) by over 18%.

9. Running A/B tests when you are not ready

Everyone is talking about A/B and multivariate testing. The idea of being able to increase your website revenue without having to drive more visitors to the website is fantastic.

But A/B testing might not work for every website. Certainly, multivariate testing is not for every site.

Testing might not work for you in two instances:

- when you do not have enough conversions

- when you do not have the mindset required to run a successful CRO program.

1. You do not have enough conversions

If you do not have enough traffic coming to your website or enough conversions, then split testing will not work for you.

A small A/B test with one challenger to the original design requires the page you plan to test to generate at least 500 conversions per month. If the page gets fewer than 500 conversions, your tests might run for too long without reaching a conclusion.

We typically do not start A/B testing with a client unless the website has 500 conversions per month. Our standard program requires clients to exceed 800 conversions per month on each platform (desktop or mobile).

Multivariate testing requires more conversions and more traffic than A/B testing. Do not consider MVT unless your website has 10,000 conversions per month.

2. You do not have the mindset needed to run a successful conversion program

While the first reason why a conversion program fails is straightforward to figure out (you look at your monthly conversions), the second problem is more difficult to assess.

The truth is that not every organization or business is ready for testing.

Testing requires you to admit that visitors may hate your existing website design.

Testing requires you to admit that some designs which you hate will generate more conversions/sales for you.

Testing requires surrendering the final design decision to your visitors.

On the surface, every business owner or top executive will say they are focused on revenue. But after running more than 500 conversion optimization projects with more than 10,000 split tests, we can state plainly that this is not always the case.

We have seen business owners reject test results showing a 32% uplift in conversions at 99% confidence because they liked the original design and could not bear the idea of changing it.

We have seen executives reject test results showing a 25% uplift in conversions at 95% confidence because they hated the winning design.

We have also seen testing programs fail because, while the CEO of the company was committed to testing, the team was not sold on the idea.

For many companies, split testing requires a complete culture shift. To make sure its results will have a direct and significant impact on your bottom line, everyone – and we do mean everyone – must be completely committed to it.

10. Calling the test too soon

You run the test and, a few days later, your testing software declares a winning design. Everyone is excited about the uplift. You stop the test and make the winning challenger your default design.

You expect to see your conversion rate increase. But it does not.

Why?

Because the test was concluded too quickly. Most testing software declares winners after achieving a 95% confidence level regardless of the number of visitors and conversions reported for different variations.

Many split testing platforms do not take into account the number of conversions the original design and the variations recorded. If the test is allowed to run long enough, you will notice that the observed uplift slowly disappears.

So, how do you deal with this?

- Regardless of the setup in the split testing platform, make sure you adjust it to require a minimum of 500 conversions for the original design and the winning challenger.

- Run your tests for a minimum of seven days so that they cover every day of the week and account for fluctuations between days. (We typically run tests for a minimum of two weeks, depending on the business cycle of the particular business.)
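These two rules can be combined with a significance check before you stop a test. The Python sketch below uses a standard pooled two-proportion z-test as that check; your testing platform may use a different statistical method (Bayesian or sequential approaches, for example), and the function names and example counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a = conv_a / visitors_a
    p_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def can_call_test(conv_a, visitors_a, conv_b, visitors_b, days_running,
                  min_conversions=500, min_days=7, alpha=0.05):
    """Apply the guardrails first, then the significance check."""
    # Both the original and the challenger need at least 500 conversions.
    if min(conv_a, conv_b) < min_conversions:
        return False
    # The test must have covered every day of the week.
    if days_running < min_days:
        return False
    # 95% confidence corresponds to alpha = 0.05.
    return two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b) < alpha


print(can_call_test(520, 20_000, 610, 20_000, days_running=14))  # True
print(can_call_test(520, 20_000, 610, 20_000, days_running=5))   # False
```

The guardrails run before the statistics on purpose: a significant result on too few conversions or too few days is exactly the early "winner" this section warns about.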

11. Calling the test too late

You have no control over external factors when you run a split test. These factors can pollute the results of your testing program.

There are three different categories of external factors that could impact your experiments:

- General market trends: a sudden downturn in the economy, for example

- Competitive factors: a competitor running a massive marketing campaign

- Traffic factors: change in the quality of organic or paid traffic

All of these factors could affect your test results through no fault of the testing program itself. For this reason, we highly recommend limiting any split test to no more than 30 days.

We have seen companies require tests to run for two to three months in an attempt to reach statistical confidence. In the process, they pollute their testing data.

In fixed-horizon testing, you calculate the number of visitors who must go through the test before launching the experiment. In this approach, you use your current conversion rate, significance level, and expected uplift to determine the required sample size.

A final note on significance levels: remember that achieving 95% significance is not a goal set in stone. Reaching a given significance level means the observed difference would be unlikely if the variations actually performed the same; it is a guide to how consistent the results are, not a guarantee that the uplift will hold.
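A fixed-horizon sample size can be estimated with the common normal-approximation formula for comparing two proportions. This Python sketch assumes a two-sided test and 80% statistical power (power is an extra input that the paragraph above does not mention), and it adds a duration check against the 30-day limit recommended earlier. The function names and example numbers are hypothetical.

```python
import math
from statistics import NormalDist

MAX_TEST_DAYS = 30  # upper limit recommended above


def sample_size_per_variation(baseline_rate, relative_uplift, alpha=0.05, power=0.80):
    """Visitors needed in each variation for a two-sided two-proportion test.

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)  # expected rate of the challenger
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def duration_days(n_per_variation, variations, daily_visitors):
    """Days needed to push every variation's sample through the test."""
    return math.ceil(n_per_variation * variations / daily_visitors)


n = sample_size_per_variation(baseline_rate=0.03, relative_uplift=0.10)
days = duration_days(n, variations=2, daily_visitors=5_000)
print(n, days, days <= MAX_TEST_DAYS)
```

With a 3% baseline and a 10% relative uplift, the sketch asks for roughly 53,000 visitors per variation, or 22 days at 5,000 daily visitors split across two variations, which stays under the 30-day limit. Smaller expected uplifts grow the sample quickly, because the required size scales with the inverse square of the difference.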

12. When technology becomes a problem

The purpose of testing is to increase your conversion rates and your revenue. Developers sometimes struggle to focus on this fact, especially when they get fascinated by a particular piece of software that complicates test implementation.

As a goal, most split tests should not take longer than three to five days to implement.

Smaller tests, with few changes to a page, might take a few hours to implement.

You must keep in mind that the first two split tests you deploy on your website will take a little longer to implement as your development team gets used to the split testing platform you selected.

However, if you notice that, over a six-month period, all of your tests take a week to ten days or longer to implement, then you MUST assess the cause of the delay:

- Is the testing platform too complicated and an overkill for the type of testing you are doing?

- Is your website or application code not developed to proper standards, causing the delays?

- Does your development team have a good handle on implementing tests or are they struggling with every test?

- Are you creating and deploying complicated tests that require significant time to develop?

Golden rule: A testing program should deploy two to six tests per month.

13. Running successful simultaneous split tests

Launching simultaneous split tests expedites your testing program. Instead of waiting for each test to conclude, you run several tests at the same time.

If you run simultaneous split tests in separate swimlanes, so that each visitor is exposed to only one test, the tests will not interfere with each other.

However, if the same visitors are navigating through your website and seeing different tests, you could be cannibalizing your testing data.

Imagine the scenario of running a test on the homepage, with three challengers to the original design. A visitor might view any of the following configurations:

| Variation | Design |
|---|---|
| H0 | Original homepage design |
| H1 | Challenger 1 |
| H2 | Challenger 2 |
| H3 | Challenger 3 |

At the same time, you run a test on the product pages, with two challengers to the original design. A visitor might view any of the following configurations:

| Variation | Design |
|---|---|
| P0 | Original product design |
| P1 | Challenger 1 |
| P2 | Challenger 2 |

In this scenario, as a visitor navigates from the homepage to the product pages, they can see any of the following combinations of designs:

| # | Combination | Designs seen |
|---|---|---|
| 1 | H0, P0 | Original homepage, original product page |
| 2 | H0, P1 | Original homepage, product page challenger 1 |
| 3 | H0, P2 | Original homepage, product page challenger 2 |
| 4 | H1, P0 | Homepage challenger 1, original product page |
| 5 | H1, P1 | Homepage challenger 1, product page challenger 1 |
| 6 | H1, P2 | Homepage challenger 1, product page challenger 2 |
| 7 | H2, P0 | Homepage challenger 2, original product page |
| 8 | H2, P1 | Homepage challenger 2, product page challenger 1 |
| 9 | H2, P2 | Homepage challenger 2, product page challenger 2 |
| 10 | H3, P0 | Homepage challenger 3, original product page |
| 11 | H3, P1 | Homepage challenger 3, product page challenger 1 |
| 12 | H3, P2 | Homepage challenger 3, product page challenger 2 |

Your two separate and straightforward tests end up impacting each other.
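The number of combinations is simply the product of the variation counts, which a few lines of Python make explicit. The third test in the sketch is hypothetical and only shows how quickly overlapping tests multiply.

```python
from itertools import product

homepage_variations = ["H0", "H1", "H2", "H3"]
product_page_variations = ["P0", "P1", "P2"]

# Every pairing a visitor could experience while moving from homepage to product page.
combinations = list(product(homepage_variations, product_page_variations))
print(len(combinations))  # 12

# A hypothetical third overlapping test with two variations doubles the count.
cart_variations = ["C0", "C1"]
print(len(list(product(homepage_variations, product_page_variations, cart_variations))))  # 24
```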

So, does that mean that you should never run simultaneous split tests?

No. We run simultaneous split tests on our projects following these guidelines:

- We target different traffic sources and device types so that each test receives its own share of the traffic.

- After we declare a winner for a test, we run the winner against the control in a head-to-head match during a period when no other tests are running.
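A minimal sketch of the first guideline, assuming each device type is given its own test and that visitor IDs are per-device cookies: the Python below routes desktop visitors to the homepage test and mobile visitors to the product page test, then hashes the visitor ID to pick a variation so a returning visitor keeps seeing the same design. The lane plan and the `assign` function are hypothetical; real testing platforms handle assignment with their own mechanisms.

```python
import hashlib

# Hypothetical lane plan: each device type gets its own test, so no visitor sees both.
LANES = {
    "desktop": ("homepage_test", ["H0", "H1", "H2", "H3"]),
    "mobile": ("product_page_test", ["P0", "P1", "P2"]),
}


def assign(visitor_id: str, device: str):
    """Return (test, variation); the same visitor and device always get the same answer."""
    test_name, variations = LANES[device]
    # Hash the visitor ID together with the test name so each test buckets independently.
    digest = hashlib.sha256(f"{test_name}:{visitor_id}".encode("utf-8")).hexdigest()
    return test_name, variations[int(digest, 16) % len(variations)]


print(assign("visitor-123", "desktop"))
print(assign("visitor-456", "mobile"))
```

Hashing instead of random assignment keeps the experience stable across page views without storing anything server-side, and the traffic within each lane is split roughly evenly across its variations.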

14. Missing the insights

The real impact of conversion optimization takes place after you conclude each of your split tests. This impact is by no means limited to an increase in conversion rates.

Yes, seeing an increase in conversion rates is fantastic!

But there is a secret to amplifying the results of any test by deploying the marketing lessons you learned across different channels and verticals.

Let’s put this in perspective.

We worked with the largest satellite provider in North America, helping them test different landing pages for their PPC campaigns. The testing program was very successful, generating significant increases in conversion rates.

As we implemented the testing program, their digital marketing director asked if we could use the same lessons we learned from split testing in their newspaper advertising.

This was a new challenge.

Would offline buyers react the same way to advertising as online buyers?

There was only one way to find out. We had to test it.

Each test was built on a hypothesis. We applied that hypothesis to both the online tests and the newspaper advertising, running three different tests online and offline.

Each test generated an uplift in conversions both online and offline.

But things did not stop there.

We then applied the same lessons to mailers which the company sent to residential addresses.

Again, we saw uplifts in conversions.

If you follow a conversion optimization methodology, then you will be able to take lessons from your testing and apply them again and again.

15. Your follow-up tests

Not every test will generate an increase in conversions. As you conclude your experiments, you will have to decide on the next steps for each test. I have seen clients and teams get frustrated because they are not able to increase conversions on a particular page. So, they move on to other sections on the website.

The post-test analysis should determine one of four possible next steps:

- Iterate on the test: conduct further tests on the page, fine-tuning the test design or trying different implementations of the original hypothesis.

- Test new research opportunities: test results point to a new hypothesis that should be tested on the page.

- Investigate further: test results show that you need to dig for deeper insights before you determine next steps.

- Pivot: Your testing data and analysis clearly indicate that you had the wrong hypothesis to start with. Time to look for other issues on the page.

16. Not documenting everything

A conversion optimization program is documentation intensive. You should record every little detail.

You must document:

- Your qualitative analysis research and findings

- Your quantitative analysis and conclusions

- Every page analysis you conduct

- Every hypothesis you make

- Screen captures of every design you deploy

- Testing data

- Post-test analysis

Many companies do not pay close attention to documentation. They discover its importance when they return to their test results a few months later. With no documentation, or with documentation scattered across multiple emails, they struggle to remember why they made a particular change. By then, it is too late.

From the very start of any CRO project, decide on the method you will use to document everything and use it thoroughly.
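One possible shape for that method is a structured record per test that captures every item in the list above. The `ExperimentRecord` class below is a hypothetical Python sketch, not a standard format; its `next_step` field is restricted to the four post-test outcomes described in mistake 15, and the example values are illustrative.

```python
import json
from dataclasses import asdict, dataclass, field

# Allowed next steps: the four post-test outcomes from mistake 15, or "" if undecided.
NEXT_STEPS = {"", "iterate", "new research opportunity", "investigate further", "pivot"}


@dataclass
class ExperimentRecord:
    name: str
    page: str
    hypothesis: str
    qualitative_findings: list = field(default_factory=list)   # qualitative research and findings
    quantitative_findings: list = field(default_factory=list)  # quantitative analysis and conclusions
    page_analysis: str = ""
    screenshots: list = field(default_factory=list)  # paths to captures of every deployed design
    results: dict = field(default_factory=dict)      # testing data per variation
    post_test_analysis: str = ""
    next_step: str = ""

    def __post_init__(self):
        # Reject unknown values so records stay consistent and searchable later.
        if self.next_step not in NEXT_STEPS:
            raise ValueError(f"unknown next step: {self.next_step!r}")

    def to_json(self) -> str:
        """Serialize the record so it can live in one shared place."""
        return json.dumps(asdict(self), indent=2)


record = ExperimentRecord(
    name="Product page: price-based incentives",
    page="/products/example",
    hypothesis="Price shoppers will stay if we surface price-based incentives",
    results={"control": {"visitors": 20_000, "conversions": 520}},
    next_step="iterate",
)
print(record.to_json())
```

Whatever format you choose, the point is the same: one consistent place where the hypothesis, the designs, the data, and the decision live together.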